HIPAA-Compliant Chatbot: Core Strategy for Secure Patient Data

Discover how a HIPAA-compliant chatbot protects patient data with practical security rules, vendor criteria, and common pitfalls to avoid.

A HIPAA-compliant chatbot isn't just a regular customer service bot with some extra features bolted on. It’s a conversational AI tool built from the ground up to handle sensitive patient information securely, following the strict guidelines of federal law.

Think of it this way: a standard chatbot is like having a conversation in a public space. A compliant one, on the other hand, creates a private, encrypted channel for every interaction. For any modern healthcare organization, that difference is everything.

What Makes a Healthcare Chatbot HIPAA Compliant

Let’s use an analogy. A standard chatbot is like discussing your health concerns in the middle of a busy coffee shop—you never know who might be listening in. A HIPAA-compliant chatbot is the digital equivalent of a private consultation room with soundproof walls and a locked door. It's not just about what's said, but about the secure environment built around the conversation.

Using a generic chatbot to talk with patients is a massive gamble. It doesn't just open your organization up to crippling fines; it completely shatters the trust your patients have in you. This is where Protected Health Information (PHI) comes into play.

The Role of Protected Health Information

PHI is any piece of health information that can be tied back to an individual. Think of a patient's name linked to a diagnosis, treatment plan, or even their insurance details. A HIPAA-compliant chatbot is designed from its very core to protect this data.

For any healthcare provider looking to adopt new technology, a compliant chatbot is non-negotiable. Its entire purpose is to prevent PHI from falling into the wrong hands through a mix of technical safeguards, strict policies, and physical security measures.

A compliant chatbot does more than just answer questions—it’s a digital guardian for patient data. It has to encrypt every conversation, tightly control who can access information, and keep detailed audit logs of every single interaction involving PHI.

This intense focus on security is fueling rapid market growth. The global healthcare chatbot market is expected to grow from USD 2,149.1 million in 2025 to USD 17,739.4 million by 2035, roughly an eightfold increase. Why the surge? Patients want 24/7 access, and 94% of healthcare providers now view AI as a key part of their operations. You can find more data on this market expansion and what's driving it.

The Critical Difference in Practice

So, what really separates a standard chatbot from a HIPAA-compliant one? It all comes down to a few fundamental differences with huge legal and practical consequences. To make it crystal clear, let's break it down side-by-side.

Standard Chatbot vs HIPAA Compliant Chatbot

| Feature | Standard Chatbot | HIPAA Compliant Chatbot |
|---|---|---|
| Data Encryption | May offer basic encryption, but often not comprehensive. | Must encrypt PHI both in transit (moving over networks) and at rest (stored on servers). |
| Data Access | Access controls are often loose or non-existent. | Strict, role-based access controls ensure only authorized users can view PHI. |
| Legal Agreements | No Business Associate Agreement (BAA). | Vendor must sign a BAA, making them legally liable for protecting PHI. |
| Audit Trails | Logging is minimal and not designed for compliance audits. | Maintains detailed, immutable audit logs of all access and interactions with PHI. |
| Data Storage | Data may be stored on shared servers without specific safeguards. | Data is stored in a secure, isolated environment that meets HIPAA standards. |
| User Authentication | Simple or no authentication required. | Requires multi-factor authentication and secure identity verification. |

In short, a compliant chatbot isn't just a "secure version" of a regular bot. It's an entirely different class of tool, built with a security-first mindset to ensure every digital patient interaction upholds the same privacy standards as a face-to-face visit.

The Core Rules Your Chatbot Must Follow for HIPAA Compliance

To make a chatbot truly HIPAA compliant, you first have to get a handle on the rules of the road for Protected Health Information (PHI). Think of it less like a single, massive rulebook and more like a set of foundational pillars. If any one of them is weak or missing, your chatbot isn't just a security risk—it's a direct violation of federal law.

These aren't just abstract legal ideas. They're the practical, brass-tacks requirements for every secure patient interaction. They tell you what data you can touch, who gets to see it, and what you must do if that data is ever compromised. Let's break down what really matters.

The HIPAA Privacy Rule

The HIPAA Privacy Rule is all about the "what" and "why" of protecting patient data. It sets the nationwide standard for safeguarding medical records and any other health information that can be tied to an individual. Simply put, it draws a clear line around who can see, use, and share a patient’s PHI.

This is ground zero for a healthcare chatbot. It means the bot can't just go sharing a patient's lab results with an unauthorized third party or use their symptom history for a marketing campaign. Every single piece of information it collects, from a simple appointment query to a detailed description of symptoms, is protected.

The HIPAA Security Rule

If the Privacy Rule sets the boundaries, the HIPAA Security Rule provides the enforcement. This rule gets specific about electronic Protected Health Information (ePHI) and lays out the administrative, physical, and technical safeguards you need to have in place. It's the digital lock on the vault.

This is where the real technical work for a compliant chatbot comes in. The Security Rule isn't optional; it demands specific measures to keep ePHI confidential, accurate, and available.

Key requirements include:

- Access Controls: Making sure only the right people can access ePHI, usually through things like role-based permissions.

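- Encryption: Scrambling ePHI so it's unreadable to anyone without a key, both while it's being sent over a network (in transit) and when it's sitting on a server (at rest). The Security Rule technically lists encryption as an "addressable" safeguard, but for a chatbot handling ePHI there is rarely a defensible reason to go without it.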

- Audit Controls: Having systems in place that log and review all activity, so you always have a record of who accessed what and when.

Without these technical safeguards, a chatbot is an open invitation for a data breach.

The Business Associate Agreement (BAA)

The third, and absolutely non-negotiable, pillar is the Business Associate Agreement (BAA). This is a formal, legally binding contract between a healthcare organization (the "Covered Entity") and any third-party vendor it works with, like your chatbot provider (the "Business Associate").

This contract is everything. It legally binds the vendor to follow all HIPAA rules and makes them directly responsible for protecting any PHI their technology handles. If a chatbot company won't sign a BAA, walk away. It's the biggest red flag you can get.

A Business Associate Agreement makes your tech partners an extension of your own compliance efforts. It ensures they share the legal and financial responsibility for protecting patient data, creating a critical link in your security chain.

No BAA means you're using a non-compliant service, period. It doesn't matter how great their encryption is. The BAA is the official handshake that makes the partnership legal and secure.

The Breach Notification Rule

Finally, the Breach Notification Rule covers the "what if" scenario. This rule dictates what you have to do if a breach of unsecured PHI happens. You are legally required to notify the affected patients and the Secretary of Health and Human Services, and, for breaches affecting more than 500 residents of a state or jurisdiction, prominent media outlets as well.

Ignoring these rules comes with a heavy price—massive fines, legal headaches, and a damaged reputation that can be hard to repair. Yet many organizations are still playing catch-up. A staggering 67% of healthcare organizations are unprepared for the tighter HIPAA compliance demands hitting AI systems in 2025. This gap is especially dangerous as both federal and state regulators are introducing new laws aimed squarely at AI tools. To get ahead, you can learn more about AI compliance requirements for 2025.

Together, these four rules form the complete legal framework for any HIPAA-compliant chatbot. Miss one, and the whole structure falls apart.

The Technical Nuts and Bolts of a Secure Chatbot

Alright, we've covered the high-level rules. Now let's get into the nitty-gritty and talk about the specific technology that actually makes a chatbot HIPAA compliant. Think of Protected Health Information (PHI) like cash in a bank. The policies and BAAs are the bank's rules, but the technical safeguards are the actual vault, the security guard, and the surveillance system.

Without this tech, your policies are just good intentions. These are the digital locks and alarms that turn a basic chatbot into a fortress for patient data.

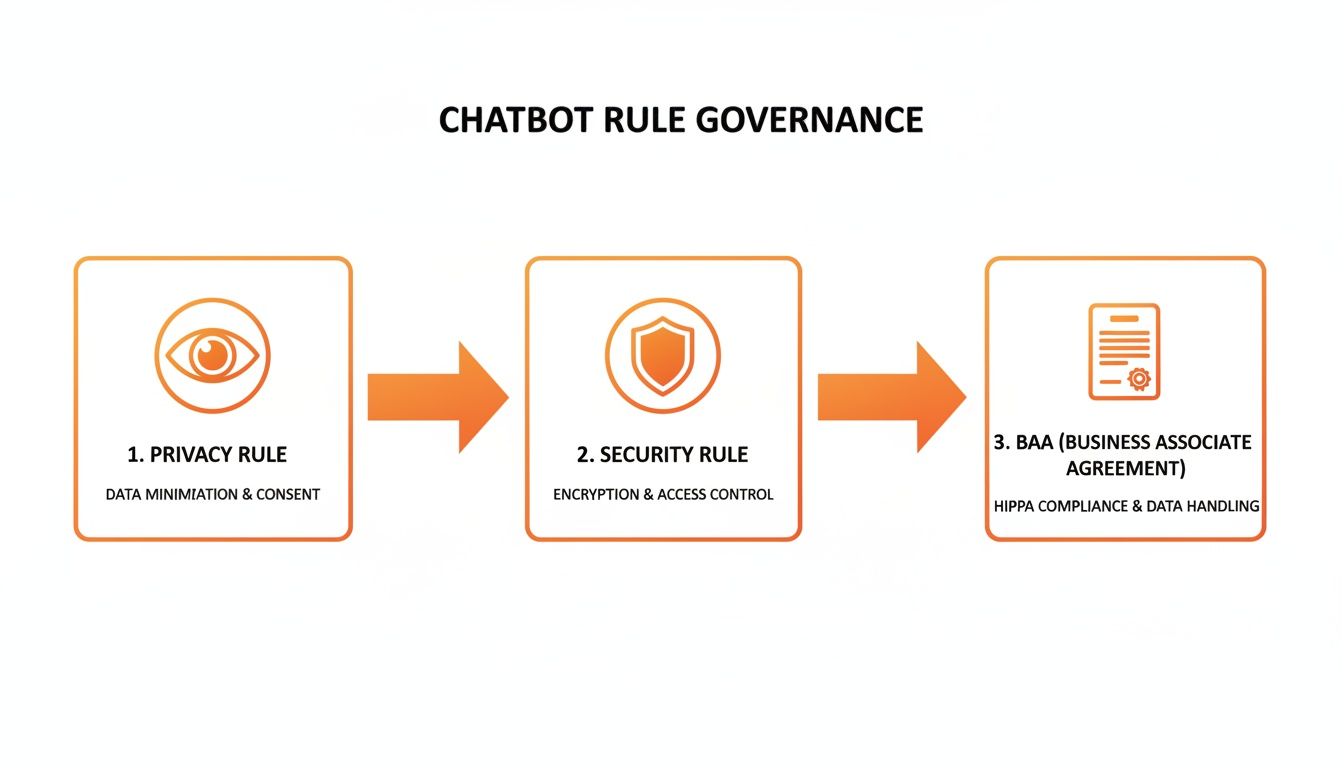

The diagram above gives you a bird's-eye view of how the core pillars (Privacy, Security, and a solid BAA) all work together to keep a chatbot in line.

As you can see, compliance isn't a single checkbox. It’s a chain of requirements, each one reinforcing the others to build a truly secure environment.

Locking Down Data with Encryption

The absolute first line of defense is encryption. It's a non-negotiable. Encryption essentially scrambles data into an unreadable code, and only someone with the right key can decipher it. A genuinely HIPAA-compliant chatbot needs to use it in two specific ways.

Encryption in Transit: This protects data as it travels between a patient's phone or computer and your servers. Think of it as sending a sensitive document in a locked briefcase via an armored truck. No one can peek at it while it's on the road.

Encryption at Rest: This is for data that's just sitting on a server or in a database. Back to our analogy, this is the document locked away in the bank vault. Even if a burglar breaks into the building, they still can't get into the vault.

You absolutely need both. Protecting data only while it's moving leaves it exposed once it arrives, which is a massive security hole just waiting to be exploited.
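For the in-transit half, here is a minimal Python sketch using only the standard library. It assumes the chatbot backend talks to an upstream system over HTTPS; the hostname in the comment is a placeholder, and the TLS 1.2 floor is an assumed policy choice rather than something HIPAA spells out. Encryption at rest is usually handled by the database or a vetted cryptography library and isn't shown here.

```python
import ssl


def build_tls_context() -> ssl.SSLContext:
    """Return a client-side TLS context that refuses weak configurations."""
    # create_default_context() turns on certificate verification and
    # hostname checking by default.
    context = ssl.create_default_context()
    # Refuse anything older than TLS 1.2 (assumed policy floor).
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = build_tls_context()
# Hypothetical usage: hand the context to an HTTPS client, for example
# http.client.HTTPSConnection("ehr.example.internal", context=context)
```

The point of building the context in one place is that every outbound connection carrying PHI can share the same settings instead of each call site choosing its own.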

Putting Strict Access Controls in Place

Once your data is encrypted and stored safely, you need to control exactly who gets to see it. That's where access controls come in. They ensure that only the right people can view or handle PHI, based on a simple but powerful idea: the "principle of least privilege."

This just means people should only have access to the minimum amount of information needed to do their jobs. A billing specialist doesn’t need to see a patient’s full clinical history, and a chatbot should be configured to enforce that.
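To make least privilege concrete, here is a small Python sketch. The role names and record fields are hypothetical, chosen only to mirror the billing example above; a real system would load them from its own policy store.

```python
from typing import Dict, Set

# Hypothetical roles and the record fields each one may read.
ROLE_FIELDS: Dict[str, Set[str]] = {
    "billing": {"patient_id", "insurance_plan", "balance_due"},
    "nurse": {"patient_id", "diagnosis", "medications"},
}


def visible_fields(role: str, record: Dict[str, str]) -> Dict[str, str]:
    """Return only the fields this role is allowed to see.

    Unknown roles get an empty result (deny by default).
    """
    allowed = ROLE_FIELDS.get(role, set())
    return {key: value for key, value in record.items() if key in allowed}


record = {
    "patient_id": "P-1001",
    "diagnosis": "example diagnosis",
    "medications": "example medication",
    "insurance_plan": "example plan",
    "balance_due": "40.00",
}

billing_view = visible_fields("billing", record)
```

Here `billing_view` contains the patient ID, insurance plan, and balance, and nothing clinical. Filtering on an allow-list means a newly added field stays hidden until someone deliberately grants access to it.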

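A huge piece of this is strong user authentication. A simple password isn't going to cut it. The Security Rule requires verifying that users are who they claim to be without prescribing a specific method, and in practice compliant systems rely on multi-factor authentication (MFA), which requires a second form of verification, like a code sent to a user's phone, before granting access.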

This simple step stops an attacker in their tracks, even if they've managed to steal a password.
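One common way to implement that second factor is a time-based one-time password (TOTP, RFC 6238), the scheme authenticator apps use. This is a swapped-in illustration, not something the workflow above prescribes, and the shared secret below is the RFC's published test value, not something to use in production.

```python
import hashlib
import hmac
import struct


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226)."""
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at_time: float, step: int = 30) -> str:
    """Time-based variant: the counter is the number of elapsed time steps."""
    return hotp(secret, int(at_time // step))


def verify_totp(secret: bytes, submitted: str, at_time: float) -> bool:
    """Compare in constant time to avoid leaking how many digits matched."""
    expected = totp(secret, at_time)
    return hmac.compare_digest(expected.encode(), submitted.encode())


TEST_SECRET = b"12345678901234567890"  # RFC test secret, for illustration only
```

A production setup would also rate-limit attempts and usually accept the adjacent time step to tolerate clock drift; both are left out to keep the sketch short.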

Keeping an Unbreakable Record with Audit Logs

The final piece of this technical puzzle is accountability. Audit logs, sometimes called audit trails, are the system's unchangeable diary. They create a detailed, permanent record of every single action involving PHI. Think of it as a security camera that's always on and whose footage can't be deleted or tampered with.

These logs must capture the crucial details:

- Who accessed the data.

- What specific information they looked at or changed.

- When it happened.

- From where they accessed it (like a specific IP address or workstation).

Audit logs are your best friend for spotting suspicious activity, investigating a potential breach, or simply proving your compliance during an official audit. If something goes wrong, these logs give you a clear digital footprint of exactly what happened, allowing you to respond quickly and effectively. Flying without them is like trying to solve a crime with no witnesses and no evidence.
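One way to make a log tamper-evident is to chain entries together with hashes, so that editing any earlier record breaks every hash after it. The sketch below is a simplified illustration of that idea under assumed field names (who, what, when, where); real deployments typically also ship logs to write-once storage.

```python
import hashlib
import json
from typing import Dict, List

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def _entry_hash(body: Dict[str, str], prev_hash: str) -> str:
    """Hash the entry body together with the previous entry's hash."""
    payload = json.dumps(body, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_entry(log: List[dict], who: str, what: str, when: str, where: str) -> None:
    """Append a record of who did what, when, and from where."""
    body = {"who": who, "what": what, "when": when, "where": where}
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    log.append({"body": body, "prev_hash": prev_hash,
                "hash": _entry_hash(body, prev_hash)})


def verify_chain(log: List[dict]) -> bool:
    """Recompute every hash; any edited or reordered entry fails the check."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _entry_hash(entry["body"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


audit_log: List[dict] = []
append_entry(audit_log, "nurse_17", "viewed chart P-1001", "2025-06-01T09:15:00Z", "10.0.0.12")
append_entry(audit_log, "bot", "booked appointment for P-1001", "2025-06-01T09:16:30Z", "chat-service")
```

Calling `verify_chain(audit_log)` returns `True` until someone alters a stored entry, at which point the recomputed hash no longer matches.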

Together, solid encryption, tight access controls, and detailed audit logs form the technical foundation of any HIPAA-compliant chatbot. They work in tandem to shield patient data from every angle, ensuring every interaction is secure, private, and accountable.

How to Choose the Right Chatbot Vendor

Picking the right HIPAA-compliant chatbot vendor is probably the single most important decision you'll make when you decide to bring this kind of automation into your practice. The chatbot itself is just a piece of technology; its actual compliance, security, and day-to-day reliability all come down to the company behind it.

Think of it this way: you’re not just buying a product. You’re entering into a partnership that directly affects your legal standing and, more importantly, your patients' trust.

Your first step is a simple, go/no-go test. Before you get into the weeds of features or pricing, ask one straightforward question: "Will you sign a Business Associate Agreement (BAA)?" If they pause, give you a vague answer, or flat-out refuse, the conversation is over. Period. A BAA is the legally binding contract that holds the vendor responsible for protecting PHI. Without one, you simply aren't compliant.

Once you have a firm "yes" on the BAA, the real work begins. It’s time to pop the hood and take a hard look at their security setup and how they handle data.

Evaluating Security and Compliance Credentials

You can tell a lot about a vendor's commitment to security by whether they've invested in third-party certifications and audits. These aren't just fancy logos for their website; they are concrete proof that an independent expert has put their systems through the wringer.

Keep an eye out for credentials like these:

- SOC 2 (System and Organization Controls 2): This is a big one. It's an audit that reports on a company's systems and controls related to security, availability, processing integrity, confidentiality, and privacy. A SOC 2 Type II report is even better because it shows those controls have been tested and proven effective over time.

- HITRUST (Health Information Trust Alliance): In healthcare, this is the gold standard. The HITRUST framework combines HIPAA rules with a number of other security standards, creating a comprehensive, certifiable benchmark for protecting health information.

While these certifications aren't legally required, they signal that a vendor takes security seriously. They’ve done the heavy lifting to build an infrastructure that's designed from the ground up to protect PHI.

Key Questions for Your Vendor Checklist

To make a truly informed choice, you need to ask pointed, specific questions. Don’t let a vendor get away with fuzzy promises like "bank-grade security." You need details.

Here’s a practical checklist to use in your vendor conversations:

- Data Encryption: How is our data encrypted, both when it's moving (in transit) and when it's stored (at rest)? What specific standards, like AES-256, are you using?

- Access Controls: Show me your role-based access controls. How do you make sure only the right people can see sensitive information? How do you enforce multi-factor authentication for your own team?

- Data Storage and Segregation: Where, physically, will our data be stored? If it’s a shared environment, how do you guarantee our data is completely walled off from other clients' data?

- Audit Trails: Can we get detailed logs showing who accessed patient data and exactly when they did it? How long do you keep these records?

- Breach Notification Plan: Walk me through your documented process if a data breach affecting our practice occurs. How quickly will we be notified?

- Employee Training: How do you train your own employees on HIPAA and your internal security protocols?

Your chatbot vendor isn't just a software provider; they are a custodian of your patients' most sensitive information. Their security posture is a direct extension of your own, making thorough vetting an essential risk management step.

This kind of rigorous evaluation is especially critical for smaller practices. There's a noticeable resource gap in AI adoption; in 2024, large hospitals hit 96% AI adoption, while smaller ones were only at 59%. Choosing a vendor with a pre-built, robust compliance framework can help level that playing field. To see the full picture, you can explore the full report on AI adoption in hospitals.

This is where platforms built with security in mind from day one, like CallCow, really shine. They are designed to handle all these complex technical safeguards right out of the box. This allows clinics of any size to deploy advanced AI voice agents without the massive headache and expense of building a compliance framework from scratch. It's not just about saving time and money—it's about the peace of mind that comes from knowing patient data is being handled the right way from the very first call.

Putting Compliant Chatbots into Practice

It's one thing to understand the rules and technical safeguards, but it’s another to see them in action. A HIPAA compliant chatbot isn't just about avoiding fines; it's a practical tool that can genuinely improve how a healthcare practice runs day-to-day. When set up right, these bots take over the repetitive, administrative grind, freeing up your team to focus on what really matters: patient care.

So, let's look at a few real-world examples where a compliant chatbot can make a huge difference.

Secure Appointment Scheduling

Automating appointment scheduling is often the first, most obvious win. It’s a task that eats up a ton of time for front-desk staff, who are often stuck playing phone tag and juggling calendars. A well-built chatbot handles this entire process from start to finish.

Here’s what a compliant workflow looks like:

- Patient Reaches Out: A patient starts a chat on your website or patient portal to book a visit. The bot instantly opens a secure, encrypted channel for the conversation.

- Identity Check: Before any Protected Health Information (PHI) is shared, the bot needs to know who it’s talking to. It might ask for a date of birth and a unique patient ID, which it checks against your secure Electronic Health Record (EHR) system.

- Find a Time: Once the patient is verified, the bot securely pulls the clinic's availability. It shows the patient open slots, and they can pick what works best for them, all inside the encrypted chat.

- Confirm and Done: The appointment is booked directly into the EHR, and the patient gets an instant confirmation. No sensitive information ever leaves the secure environment.

The secret sauce here is that the entire interaction happens within a protected bubble. The chatbot acts as a secure go-between, automating a crucial task without putting patient data at risk.
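The four steps above can be sketched in a few lines of Python. Everything here is a stand-in: `InMemoryEHR` is a hypothetical class that fakes the EHR lookup and booking calls, and a real integration would go through the EHR vendor's own API over an encrypted connection.

```python
import hmac
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class InMemoryEHR:
    """Stand-in for a real EHR integration (hypothetical interface)."""
    birth_dates: Dict[str, str]
    open_slots: List[str]
    bookings: Dict[str, str] = field(default_factory=dict)

    def verify_identity(self, patient_id: str, date_of_birth: str) -> bool:
        expected = self.birth_dates.get(patient_id)
        if expected is None:
            return False
        # Constant-time comparison of the supplied date of birth.
        return hmac.compare_digest(expected.encode(), date_of_birth.encode())

    def book(self, patient_id: str, slot: str) -> bool:
        if slot not in self.open_slots:
            return False
        self.open_slots.remove(slot)
        self.bookings[slot] = patient_id
        return True


def schedule(ehr: InMemoryEHR, patient_id: str, date_of_birth: str, wanted_slot: str) -> str:
    """Verify identity first; only then touch the calendar."""
    if not ehr.verify_identity(patient_id, date_of_birth):
        return "identity_not_verified"
    if not ehr.book(patient_id, wanted_slot):
        return "slot_unavailable"
    return "confirmed"


ehr = InMemoryEHR(
    birth_dates={"P-1001": "1980-02-14"},
    open_slots=["2025-07-01T09:00", "2025-07-01T09:30"],
)
```

The ordering is the design choice that matters: no availability or booking call runs until the identity check passes, so an unverified session never sees or changes anything tied to a patient.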

This doesn't just give your staff their time back; it gives patients a way to book appointments 24/7, which is a massive upgrade to their experience.

Verified Prescription Refills

Refill requests are another perfect job for a compliant chatbot. This workflow is even more sensitive because it involves medication details, so the identity verification has to be rock-solid.

A secure process for refills typically involves:

- Secure Sign-In: The patient logs into the patient portal, which acts as the first strong layer of authentication.

- Request and Review: The patient asks the bot for a refill. The chatbot then checks their records to see which prescriptions are eligible for renewal.

- Extra Verification: For an added layer of security, the bot might text a one-time code to the patient’s phone on file. They enter the code to prove it’s really them.

- Secure Routing: Once confirmed, the request is sent securely to the clinical team for approval and then forwarded to the patient’s pharmacy through an encrypted channel.

This automated flow cuts down on phone calls and creates a clean, verifiable trail for every request, reducing the chance of errors while staying firmly within HIPAA guidelines.
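The "Extra Verification" step is the part most easily gotten wrong, so here is a hedged Python sketch of it. The five-minute validity window and the single-use rule are assumptions for illustration; sending the text message itself is left out because it depends entirely on the SMS provider.

```python
import hmac
import secrets
from dataclasses import dataclass

CODE_TTL_SECONDS = 300  # assumed five-minute validity window


@dataclass
class PendingCode:
    code: str
    expires_at: float
    used: bool = False


def issue_code(now: float) -> PendingCode:
    """Create a random six-digit code from a cryptographically secure source."""
    code = f"{secrets.randbelow(10 ** 6):06d}"
    return PendingCode(code=code, expires_at=now + CODE_TTL_SECONDS)


def redeem_code(pending: PendingCode, submitted: str, now: float) -> bool:
    """Accept the code once, before it expires, using a constant-time compare."""
    if pending.used or now > pending.expires_at:
        return False
    if not hmac.compare_digest(pending.code.encode(), submitted.encode()):
        return False
    pending.used = True
    return True
```

A typical flow would call `issue_code(time.time())` when the refill is requested, text the code to the phone number on file, and only route the request to the clinical team once `redeem_code` returns `True`.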

Private Patient Intake

The pre-visit ritual of filling out forms can be tedious. A HIPAA compliant chatbot can handle the intake process before the patient even sets foot in the clinic, gathering medical history, symptoms, and insurance information privately and efficiently.

The bot simply guides the patient through a series of questions in a secure chat session. The answers are encrypted and sent straight to the patient’s chart in the EHR. When the patient arrives, the clinical team already has everything they need for a more focused and productive visit.

Tools like CallCow are built to manage these kinds of secure, automated interactions. By using compliant AI voice agents, practices can handle scheduling, reminders, and intake questions over the phone, with every conversation logged and protected. It makes sophisticated automation a real possibility without ever having to compromise on patient privacy.

Common Implementation Mistakes You Must Avoid

Rolling out a HIPAA-compliant chatbot is a huge step forward, but the path from idea to a live, secure tool is littered with potential missteps. Getting it wrong can lead to serious compliance violations, wasted money, and, worst of all, a breakdown in patient trust. Knowing where others have stumbled is the best way to avoid making the same mistakes yourself.

The absolute biggest mistake you can make is launching a chatbot without a signed Business Associate Agreement (BAA) from the vendor. This isn't just a piece of paperwork; it's a non-negotiable, legally-binding contract. Without it, you’ve committed a clear HIPAA violation right out of the gate, leaving your practice completely exposed.

Overlooking Staff Training and Handoffs

Another pitfall is assuming the chatbot can do everything on its own. Your team needs to be trained on exactly how it works, what its limits are, and—most importantly—when and how to jump into a conversation. A chatbot without a clear handoff plan to a real person is just a frustrating dead end for your patients.

Think of it as a digital front desk assistant. They can answer common questions, but they need to know precisely when to buzz a nurse or doctor. Without that clear protocol, you’ll only create confusion and mishandle sensitive patient needs.
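A handoff protocol can start out as a very small rule set. The Python sketch below is illustrative only: the trigger phrases and the two-misses threshold are made-up assumptions, and a real list would need review by clinical staff.

```python
from dataclasses import dataclass
from typing import List

# Illustrative phrases only; a real list would need clinical review.
ESCALATION_PHRASES = ("chest pain", "emergency", "speak to a person")
MAX_MISSES_IN_A_ROW = 2  # assumed threshold


@dataclass
class Turn:
    patient_text: str
    bot_understood: bool


def should_hand_off(history: List[Turn]) -> bool:
    """Decide whether the conversation should go to a staff member."""
    if not history:
        return False
    latest = history[-1].patient_text.lower()
    # Rule 1: the latest message contains an escalation phrase.
    if any(phrase in latest for phrase in ESCALATION_PHRASES):
        return True
    # Rule 2: the bot failed to understand the last few turns in a row.
    recent = history[-MAX_MISSES_IN_A_ROW:]
    return len(recent) == MAX_MISSES_IN_A_ROW and all(
        not turn.bot_understood for turn in recent
    )
```

Keeping the rules this explicit makes them easy to walk through in staff training, which is the point of the handoff plan in the first place.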

Using Non-Compliant Consumer Tools

It’s tempting to grab a familiar, off-the-shelf AI tool, but this is a shortcut you can't afford to take. Standard consumer platforms or messaging apps simply weren't built for healthcare. They're missing the core security features HIPAA demands, like end-to-end encryption, strict access controls, and detailed audit logs.

Using a generic AI tool for patient communication is like sending sensitive medical records on a postcard. It completely ignores patient privacy, opening your organization up to massive fines and reputational damage.

Finally, don't overlook system integration. If your chatbot doesn't connect securely and smoothly with your core systems, like your Electronic Health Record (EHR), you're creating dangerous security gaps. This can lead to fragmented data and new vulnerabilities.

The good news? Every one of these mistakes is avoidable with a solid plan. If you approach this implementation with the same care you give a clinical procedure, your chatbot will be a compliant, effective, and trusted resource from day one.

Answering Your Top Questions

As you start exploring HIPAA-compliant chatbots, a few common questions always seem to pop up. Let's tackle them head-on so you have the clarity you need.

Can a Chatbot Using a Large Language Model Be Compliant?

Yes, but this is a big one. You can't just plug a public Large Language Model (LLM) like the ones from OpenAI into your website and call it a day. That's a huge compliance risk.

To make it work, the model has to live in a private, secure environment that's built for HIPAA. Crucially, you need a signed Business Associate Agreement (BAA) with whoever is hosting that model. The goal of this setup is to ensure that no Protected Health Information (PHI) ever touches public servers or gets used to train the AI.
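As an extra layer on top of the BAA and private hosting, some teams scrub obvious identifiers from text before it reaches any model. The Python sketch below shows the idea with three simple patterns. It is an assumption-laden illustration: pattern matching like this catches only the easy cases and does not amount to HIPAA de-identification on its own.

```python
import re

# Simple illustrative patterns; they miss many real-world formats.
PATTERNS = [
    (re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "[SSN]"),
    (re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"), "[EMAIL]"),
    (re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"), "[PHONE]"),
]


def redact(text: str) -> str:
    """Replace matches of each pattern with a fixed placeholder."""
    for pattern, placeholder in PATTERNS:
        text = pattern.sub(placeholder, text)
    return text


sample = "Reach me at jane@example.com or 555-123-4567, SSN 123-45-6789"
scrubbed = redact(sample)
```

Names, dates, addresses, and free-text clinical details pass straight through a filter like this, which is why it can only supplement the hosting and contractual safeguards described above.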

What Happens If a Data Breach Occurs?

This is where your BAA becomes incredibly important. If your chatbot vendor has a security breach that exposes patient data, the HIPAA Breach Notification Rule gets triggered. Your BAA should spell out exactly what happens next, starting with the vendor notifying you immediately.

Once you get that notification, the clock starts ticking. Your organization is then legally on the hook to:

- Tell all affected patients about the breach without unreasonable delay, and no later than 60 days after the breach is discovered.

- Formally notify the Secretary of Health and Human Services.

- Alert prominent media outlets if the breach affects more than 500 residents of a single state or jurisdiction.
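The deadlines behind those obligations are simple date arithmetic. The Python sketch below reflects the timelines in the Breach Notification Rule as commonly summarized (60 days for individuals, HHS notice at the same time for breaches of 500 or more people, an annual report for smaller ones). The function and field names are hypothetical, and the output is a planning aid to check against your BAA and counsel, not a legal determination.

```python
from datetime import date, timedelta
from typing import Dict

NOTICE_WINDOW = timedelta(days=60)


def notification_plan(
    discovered_on: date,
    affected_total: int,
    max_affected_in_one_state: int,
) -> Dict[str, object]:
    """Work out the outer notification deadlines for a breach."""
    outer_deadline = discovered_on + NOTICE_WINDOW
    if affected_total >= 500:
        # Larger breaches: HHS is notified on the same timeline as individuals.
        hhs_deadline = outer_deadline
    else:
        # Smaller breaches may be reported annually, within 60 days
        # after the end of the calendar year of discovery.
        hhs_deadline = date(discovered_on.year, 12, 31) + NOTICE_WINDOW
    return {
        "individuals_by": outer_deadline,
        "hhs_by": hhs_deadline,
        "media_notice_required": max_affected_in_one_state > 500,
    }


large = notification_plan(date(2025, 3, 1), 1200, 800)
small = notification_plan(date(2025, 3, 1), 40, 40)
```

These are outer limits; the rule's "without unreasonable delay" language means waiting until day 59 is not a safe plan.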

Do Patients Need to Give Explicit Consent?

HIPAA doesn't demand a special consent form just for using a chatbot, but being upfront is non-negotiable. You have to tell patients they're talking to a bot, not a person. You also need to make your Notice of Privacy Practices easy for them to find.

A good rule of thumb is to get a quick acknowledgment before the chat starts. This ensures patients know how their information is being managed.

What truly matters is that any PHI collected is only used for treatment, payment, or healthcare operations, just as it would be during an in-person visit.

How Much Does a HIPAA-Compliant Chatbot Cost?

The cost for a compliant solution can vary quite a bit. It really depends on how much custom work you need, how deeply it needs to connect with your EHR, and the specific features you're looking for.

CallCow provides AI voice agents built on a secure foundation, enabling healthcare practices to automate calls for appointments and reminders while adhering to strict privacy standards. Discover how to implement a secure, compliant solution by visiting the CallCow website.